“Rolling forward” at startup can take a long time, and is not interruptible (after unclean shutdown) #11600

rebroad opened this issue on November 3, 2017

-

rebroad commented at 3:10 pm on November 3, 2017: contributor

bitcoind is spending hours “Rolling forward” upon startup, seemingly reprocessing blocks that were previously processed. There have been reports of this happening since July on Stack Exchange and Reddit, so I thought it was time an issue was raised, given it’s still happening in v0.15.1.

-

TheBlueMatt commented at 3:16 pm on November 3, 2017: contributor

This happens when bitcoind isn’t allowed to shut down cleanly after connecting many blocks with a large dbcache. Ideally we’d have a mechanism for flushing the cache to disk in the background so that we don’t end up in this state, but if you do a full, normal, clean shutdown, this shouldn’t happen.

-

rebroad commented at 3:26 pm on November 3, 2017: contributor

It looks like bitcoind does not respond to a kill request during these hours, so it’s not possible to shut down cleanly…

-

TheBlueMatt commented at 3:53 pm on November 3, 2017: contributor

Ah, well, I meant shutting down cleanly prior to the “roll forward”, but indeed, it looks like there is an issue here in that the “roll forward” doesn’t allow shutdown in the middle of it.

-

laanwj added the label UTXO Db and Indexes on Nov 8, 2017

-

laanwj added the label Validation on Nov 8, 2017

-

laanwj renamed this:

hours spent "Rolling forward"

"Rolling forward" at startup can take a long time, and is not interruptible

on Nov 8, 2017

-

snobu commented at 9:31 pm on November 27, 2017: none

Outside of making sure the system always allows bitcoind to cleanly exit, is there maybe a workaround for those times the infrastructure just slips from underneath you?

-

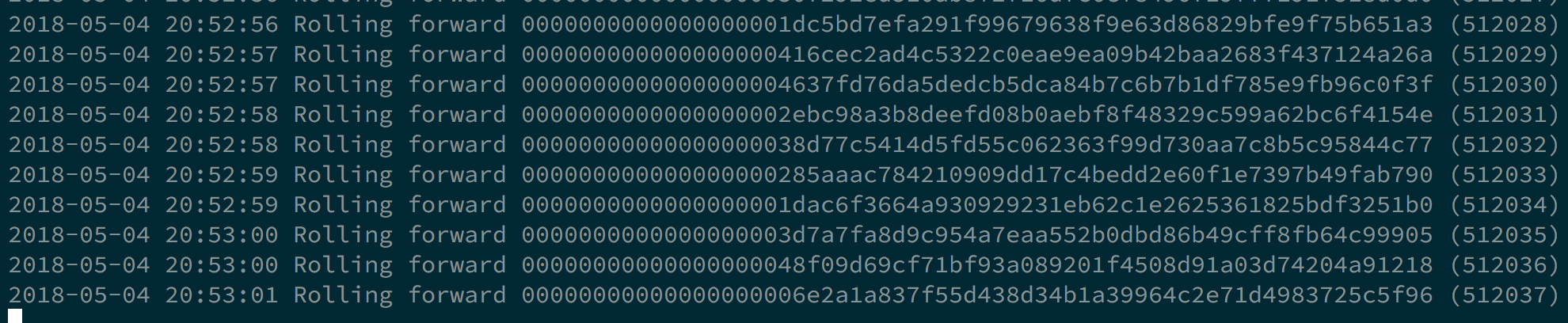

mecampbellsoup commented at 8:54 pm on May 4, 2018: none

In my case, I had a very large dbcache=6144 and left my machine unattended, at which point it ran out of battery and had a very unclean exit 😆 So for the past day or so bitcoind has been ‘rolling forward’ all the blocks it was processing, most of which resided in the potentially tainted DB cache:

Since I can’t bitcoin-cli stop until this resync completes, is it safe to kill bitcoind, i.e. will I lose all the work that’s been done so far to roll these blocks forward? Or is each block being processed and written to disk (is that the definition of ‘rolling forward’)? Any links to source code would be appreciated! 😄

-

rbndg commented at 1:46 am on May 6, 2018: none

I had the same issue and I did exit, and it was fine after I reopened bitcoind. However, bitcoind will continue to roll forward after reopening.

-

Sjors commented at 4:39 pm on June 9, 2018: member

I just ran into this rolling forward issue after running out of memory, although when that same machine ran out of memory earlier it just started syncing the regular way from where it left off. This rolling forward also seems to be 5x slower per block than the sync was (update: actually, it speeds up after a few minutes).

-

ccdle12 commented at 9:48 am on June 16, 2018: contributor

Same issue, testing a fault-tolerant node in the cloud and spinning it back up after a forced crash using a persisted datadir. The resync has to complete before bitcoind can be stopped, whether directly or via bitcoin-cli stop. Just wondering if there could be a mechanism to improve the resync?

-

StayCoolDK commented at 9:15 am on July 11, 2018: none

I’ve also had this issue. Recently I’ve found it’s getting harder to shut down Bitcoin Core. The debug.log stops when the mempool is dumped, forcing me to do an “unclean shutdown”, which forces the rolling forward of blocks. Why is it so hard to shut down Bitcoin Core cleanly, and why is there no appropriate feedback about what is happening? I’m running version 0.16.1 on the newest macOS.

-

Sjors commented at 10:48 am on July 11, 2018: member

How long did you wait? How big is your dbcache? The database cache is flushed to disk after that mempool log message, and it can take a while if you synced many new blocks.

Maybe there should be a log message indicating that it’s ongoing.

-

StayCoolDK commented at 11:18 am on July 11, 2018: none

I waited about an hour. The dbcache is 8000MB. Alright, that’s good to know for future reference, thanks! And I do agree: a log message should indicate that the cache is being flushed to disk.

-

reqshark commented at 3:43 am on September 6, 2018: none

This bitcoin.conf file has:

dbcache=16384

So boring, there’s nothing informative, just hella zero-padded hashes all damn day…

So what’s the resolution on this one? HODL your roll forward, I guess.

-

romainPellerin commented at 2:45 pm on September 21, 2018: none

I concur, we are syncing a node with txindex=1, and it takes forever already. So when the node starts rolling forward it adds up… 1 day to roll forward… This must be fixed since it slows down adoption.

-

romainPellerin commented at 9:03 pm on September 21, 2018: none

For future users who could face the same issue, we solved it by setting:

dbcache=16384

in bitcoin.conf and running our node on an AWS r4.large instance. This solution is not accessible to everyone, though.

-

rraallvv commented at 7:42 pm on October 31, 2018: none

Same problem here. Is there some alternative to the official bitcoin daemon? I just started to run a full node and had only about 4 GB of synced data when the problem showed up, so I’d prefer to use something else if possible.

-

mecampbellsoup commented at 8:23 pm on October 31, 2018: none

@rraallvv not to be a jerk but did you Google that?

In addition to btcd, you can use bcoin from purse.io as well: https://bcoin.io/

-

rraallvv commented at 9:54 pm on October 31, 2018: none

@mecampbellsoup Thanks, I’ll give it a try.

I should have pointed out that googling for Bitcoin alternatives isn’t that helpful; all I get is spammy posts about altcoins and ICO offerings. Something similar happened to me when trying to search for alternatives to the official Ethereum client.

-

vizeet commented at 4:34 am on June 12, 2019: none

I am stuck with the same problem. My dbcache is 10G. My battery ran out and it was rolling forward until the point it got stuck. debug.log is not updating anymore. I see chainstate is still growing. I’ll probably leave the system for a couple of hours until chainstate is fully loaded.

-

setpill commented at 8:33 am on August 8, 2019: contributor

How does one shut down bitcoind cleanly to prevent this? I ran into this problem after calling systemctl stop bitcoind.service. I am using this bitcoind.service (with a different blocksdir). As far as I understand, the changes I made (fixing the initial setup of the config dir permissions) should not affect the way bitcoind is shut down via systemctl, leading me to believe the provided bitcoind.service file from the Bitcoin Core repo also has this problem.

-

glendon144 commented at 11:45 pm on October 29, 2019: none

I find this behavior extremely frustrating. I run several Bitcoin Core pruning nodes, and one of them has been rolling forward for about 24 hours with no status report. I would at least like to see a percent indicator based on what percentage of blocks have been processed. This wouldn’t have to update constantly; updating once every 10 minutes or so would help a lot. I realize that the status of this source code has almost reached religious significance for those of us who are bitcoin maximalists, but I think this would be a great feature to add, if anyone wants to do it. (If it’s not done already by the time you read this.) Thanks!
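The suggested indicator (a percentage, refreshed every ten minutes or so) needs little more than a start height, a target height and a timer. A minimal Python sketch, assuming both heights are known when the replay begins; `ProgressReporter` is a hypothetical name, and the clock is injectable so the throttling can be tested without waiting.

```python
import time
from typing import Callable, Optional


class ProgressReporter:
    """Report replay progress as a percentage, at most once per `interval` seconds."""

    def __init__(self, start: int, target: int, interval: float = 600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.start = start          # height the replay begins from (assumed known)
        self.target = target        # height the replay must reach (assumed known)
        self.interval = interval    # 600 s, i.e. "every 10 minutes or so"
        self.clock = clock
        self.last_report: Optional[float] = None

    def percent(self, height: int) -> float:
        total = self.target - self.start
        if total <= 0:
            return 100.0
        return 100.0 * (height - self.start) / total

    def update(self, height: int) -> Optional[str]:
        """Call after each replayed block; returns the printed line, or None if throttled."""
        now = self.clock()
        due = self.last_report is None or now - self.last_report >= self.interval
        if not due and height < self.target:
            return None  # too soon since the last report, and not finished yet
        self.last_report = now
        line = f"Rolling forward: height {height} of {self.target} ({self.percent(height):.1f}%)"
        print(line)
        return line
```

The final height is always reported, even if the interval has not elapsed, so the last line shown is never stale.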

-

laanwj added the label Resource usage on Oct 30, 2019

-

somewhatnmc commented at 4:48 am on November 30, 2019: none

*somewhat waves!

Naive question, is there an upper bound on the time this rolling forward takes? Could it be more than IBD? Somewhat asks as they may just delete everything and start afresh?

-

currentsea commented at 4:56 am on March 5, 2020: none

This really needs a solution; the amount of time it is taking me to get a full node running in a place with non-ideal bandwidth is making it next to impossible to want to adopt this platform. There has to be a better solution than needing to wait multiple days on end just to get a full node working properly with a full txindex.

-

StopAndDecrypt commented at 4:58 pm on February 25, 2021: none

Found this after I ungracefully killed my node process during IBD (because I set the dbcache too high).

Ultimately, sometimes the software crashes and this is what needs to happen, but when it does we should be able to interrupt/shut down the client while this “rolling forward” process is happening. QT splash screen says “press q” but pressing q doesn’t do anything, and bitcoind doesn’t shut down with Control+C. Only kill -9 gets the job done.

I second @glendon144’s suggestion that for QT, since the GUI doesn’t launch until this process completes, perhaps a block height status should be displayed. If it’s rolling forward, display the current height (the logs show it, so the QT splash screen should be able to), and what block height it’s rolling forward to (if possible, not sure about this one). @somewhatnmc: looking at my logs in real time as I type this, and it appears to go through the blocks at an equivalent pace (maybe slightly faster) to when it was originally validating those blocks. Your comment is 1+ year old but I’m responding to it for anyone new who might read this and be curious.

-

sipa commented at 6:24 pm on February 25, 2021: member

Generally the “rolling forward” steps are slower than just normal block processing, because certain optimizations don’t apply. It’s working on an inherently inconsistent state, so some assumptions that are valid during normal processing aren’t possible there. It’s not an optimization - it’s trying to recover from a crash, and the alternative is just starting over from scratch.

Better UX around it would certainly be useful.

-

wakamex commented at 10:31 pm on April 30, 2021: none

i must have messed up my dbcache by trying to delete recent blocks that refused to sync by deleting the last 24hrs of files by date modified in /data/blocks (is there a better way?).

now i’m stuck in the “replaying blocks” and “rolling forward” process that’s uninterruptible without losing all progress. it’s been 2.5 days, and at this rate it will take another 2.5 days, on a 3950x with 32 GB of RAM. that’s if it doesn’t slow down further: it did the first 88 blocks in 1 second, then hit 0.7 s/block at ~280k blocks and is now at 1.15 s/block at 475k blocks. i run it with

bitcoin-qt.exe -dbcache=16384 -maxmempool=20000

which i saw recommended for initial processing, though it’s using only 7.6 GB of RAM. is there a way to speed this up? as it is, it makes me not want to use this client, at least not as a full node. startup log

-

mbrunt commented at 10:25 pm on August 13, 2021: none

Does the general community have a preferred way forward on this? I would be interested in helping but I don’t want to code a solution that is not going to be accepted.

-

sipa commented at 10:38 pm on August 13, 2021: member

There isn’t really a solution, besides an invasive redesign of the database and cache structure. If you set the dbcache high, and your node crashes in the middle of flushing that to disk, the resulting state will be inconsistent, and fixing that takes time. The alternative is starting over from scratch.
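The inconsistency described here can be illustrated with a toy Python model (hypothetical names throughout; this is not Bitcoin Core code). It assumes a flush that first records the old and new tip heights as a marker and then writes the cached entries in several batches. A crash between batches leaves the disk holding a mix of both states, and recovery re-applies every block between the two tips. The model also assumes each block only overwrites or deletes keys, so replaying a block on top of partially written data is harmless; real UTXO updates need more care.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Hypothetical model: a block is a list of (key, value) writes; value None deletes the key.
Block = List[Tuple[str, Optional[str]]]


class SimulatedCrash(Exception):
    """Raised to mimic the process dying partway through a flush."""


@dataclass
class ToyDisk:
    data: Dict[str, str] = field(default_factory=dict)
    best_height: int = 0                           # height the data is consistent with
    head_marker: Optional[Tuple[int, int]] = None  # (old, new) while a flush is in flight


def write(store: Dict[str, str], key: str, value: Optional[str]) -> None:
    if value is None:
        store.pop(key, None)
    else:
        store[key] = value


def connect_in_cache(dirty: Dict[str, Optional[str]], block: Block) -> None:
    """Connecting a block only touches the in-memory cache of dirty entries."""
    for key, value in block:
        dirty[key] = value


def flush(disk: ToyDisk, dirty: Dict[str, Optional[str]], new_height: int,
          batch_size: int, crash_after_batches: Optional[int] = None) -> None:
    """Write the cache in batches; optionally 'crash' before batch number crash_after_batches."""
    disk.head_marker = (disk.best_height, new_height)  # recorded before any data is written
    items = sorted(dirty.items())
    for batch_no, start in enumerate(range(0, len(items), batch_size)):
        if batch_no == crash_after_batches:
            raise SimulatedCrash()
        for key, value in items[start:start + batch_size]:
            write(disk.data, key, value)
    disk.best_height = new_height
    disk.head_marker = None
    dirty.clear()


def roll_forward(disk: ToyDisk, blocks: List[Block]) -> int:
    """After a crash mid-flush, replay blocks old+1..new; returns how many were replayed."""
    if disk.head_marker is None:
        return 0  # clean state: nothing to repair
    old, new = disk.head_marker
    for height in range(old + 1, new + 1):
        for key, value in blocks[height - 1]:  # blocks[0] is the block at height 1
            write(disk.data, key, value)
    disk.best_height = new
    disk.head_marker = None
    return new - old
```

The replay touches every block between the two tips regardless of how much of the flush had already landed, which is why a large dbcache (many blocks between flushes) turns into a long roll forward.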

-

mbrunt commented at 11:14 pm on August 13, 2021: none

If I set the dbcache to 10 MB, it still won’t flush while rolling forward; the final flush at the end crashes my system every time, so maybe that is another bug to fix. Is it not possible to flush the cache to disk every once in a while? I made a quick change in validation.cpp to test this and it didn’t work out of the box, but I may have gotten unlucky and stopped it in a bad spot.

-

sipa commented at 11:17 pm on August 13, 2021: member

Rolling forward is what it does to fix the inconsistency, when it is already too late. Changing dbcache won’t change the fact that it’s already inconsistent. Using a smaller dbcache when it first gets inconsistent prevents huge roll-forwards.

-

mbrunt commented at 11:34 pm on August 13, 2021: none

That makes sense; I just figure it must be possible to save the state of rolling forward. This must be creating an endless loop of long-running failures for new people. I will try to have a look at why it’s crashing when it finishes, but that will take a while to replicate.

-

sipa commented at 11:41 pm on August 13, 2021: member

Oh, I see. It may be possible to improve upon that.

-

mbrunt commented at 0:37 am on August 14, 2021: none

I think I have it figured out: I just need to allow it to shut down during the roll forward but not during the intermediate flush. The rest “should” mostly work from what I can tell.
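This approach can be sketched as a replay loop that checks for a shutdown request only between blocks and saves a checkpoint every few blocks, so an interrupted roll forward resumes from the last checkpoint instead of starting over. This is a hypothetical Python model (`Checkpoint`, `resumable_roll_forward` and `flush_every` are invented names); each checkpoint is treated as one atomic step, matching the idea of allowing shutdown during the roll forward but not in the middle of an intermediate flush.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Block = List[Tuple[str, str]]  # hypothetical: a block is a list of key writes


@dataclass
class Checkpoint:
    """Durable progress: the replayed state and the last height it covers."""
    height: int
    state: Dict[str, str] = field(default_factory=dict)


def resumable_roll_forward(checkpoint: Checkpoint, blocks: List[Block], target: int,
                           flush_every: int, should_stop: Callable[[], bool]) -> bool:
    """Replay blocks up to `target`; return True when finished, False if interrupted.

    blocks[h - 1] holds the block at height h. Work done after the last
    checkpoint is discarded on interruption, so a restart resumes at
    checkpoint.height rather than at the beginning.
    """
    if flush_every < 1:
        raise ValueError("flush_every must be at least 1")
    work = dict(checkpoint.state)  # in-memory working copy, i.e. the cache
    height = checkpoint.height
    while height < target:
        # Safe point: no checkpoint is half-written here.
        if should_stop():
            return False
        height += 1
        for key, value in blocks[height - 1]:
            work[key] = value
        if height % flush_every == 0 or height == target:
            # Treated as one atomic step in this model; shutdown is never honored mid-checkpoint.
            checkpoint.state = dict(work)
            checkpoint.height = height
    return True
```

The trade-off is the usual one: a smaller `flush_every` loses less work on interruption but spends more time writing checkpoints.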

-

maximlomans commented at 9:59 pm on September 11, 2021: none

gave this a try: stop bitcoind, lnd and mysql, then flush caches

# sync; echo 1 > /proc/sys/vm/drop_caches
# sync; echo 2 > /proc/sys/vm/drop_caches
# sync; echo 3 > /proc/sys/vm/drop_caches

i can at least reach testnet, set the wallet up etc. after being stuck in a similar loop; hoping that after a reboot i get to mainnet …

-

maximlomans commented at 12:07 pm on September 12, 2021: none

Oops, back there on main. it’s not a mem to cache thing - i think it’s reindexing, i never saw the 100% - i did 98% and hit the sack, so i don’t know what happened when it completed or if it actually did on setup . hate having to imagine what it would be like on a pi3b with only 1Gb of ram. i’ll check mem in a couple of hours to see if it could use some freeing up

i’ll have look into mysql optimizations - cache size - watermarks, tables, stack, query, locking etc

-

maximlomans commented at 11:54 am on September 13, 2021: none

it is a process: the rolling forward has now ended and now it’s busy with “Syncing txindex with block chain from height xxxxx”. what do yous know, patience is still a virtue .. that’s another 3 days so far after a 10-day blockchain data pull, so the two weeks suggested in the docs were spot on. of course i thought that would only be the case for those on a 56Kb adsl connection …

root@raspi:~# free -hb
               total        used        free      shared  buff/cache   available
Mem:           3.7Gi       1.1Gi        38Mi        24Mi       2.5Gi       2.5Gi
Swap:          2.0Gi       2.0Gi          0B

root@raspi:~# sync; echo 1 > /proc/sys/vm/drop_caches

check freed mem:

root@raspi:~# free -hb
               total        used        free      shared  buff/cache   available
Mem:           3.7Gi       1.1Gi       1.4Gi        24Mi       1.2Gi       2.5Gi
Swap:          2.0Gi       2.0Gi       0.0Ki

freeing up cache every hour or so is all i could do, and wait

-

maximlomans commented at 7:42 pm on September 13, 2021: none

I came out the other end O.K. after 2 weeks, as the docs said. I didn’t believe it; I thought it would only be the case for those on a 56Kb adsl connection. It turned out to be the case no matter what: a pi4 can only run so hard. 8 GB would’ve been better, I suppose. All the reboots won’t speed anything up; quite the contrary, they might even corrupt the data. I did use the BTRFS option, which I’m now glad I did. I disabled “run behind Tor” in menu/settings, and cleared the cache every 2 to 3 hours over the last two days. I exited mainnet and tried testnet, which synced successfully within three hours; that gave me faith in going back to see it through to the end on mainnet.

Patience still a virtue in 2021

-

saragonason commented at 1:55 pm on March 31, 2024: none

Hi, this problem still exists in version v24.0.1. I did not wait for a proper shutdown, because it took too much time. Now I am in the rolling forward state. My parameters were: bitcoind.exe -maxconnections=250 -datadir=F:\Bitcoin -dbcache=16384

-

willcl-ark commented at 2:26 pm on April 10, 2024: member

The next step here is to make it interruptible.
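Making the roll forward interruptible means the replay loop has to notice a shutdown request, such as Ctrl+C (SIGINT) or the SIGTERM sent by `systemctl stop` or `docker stop`, between blocks. A common pattern is a signal handler that does nothing but set a flag, which the worker polls at safe points. The sketch below shows that pattern in Python with hypothetical names; it illustrates the idea and is not how Bitcoin Core wires up its own shutdown.

```python
import signal
import threading
from typing import Callable, Iterable

# Set from the signal handler and polled by the replay loop.
shutdown_requested = threading.Event()


def _request_shutdown(signum, frame) -> None:
    # Keep the handler trivial: record the request and return.
    shutdown_requested.set()


def install_shutdown_handlers() -> None:
    """Route SIGINT (Ctrl+C) and SIGTERM (systemctl stop / docker stop) to the flag."""
    signal.signal(signal.SIGINT, _request_shutdown)
    signal.signal(signal.SIGTERM, _request_shutdown)


def replay(heights: Iterable[int], apply_block: Callable[[int], None]) -> int:
    """Apply blocks in order, stopping at the first safe point after a shutdown request.

    Returns the last height applied, or -1 if none were applied.
    """
    last = -1
    for height in heights:
        if shutdown_requested.is_set():
            break  # checked between blocks, so no block is left half-applied
        apply_block(height)
        last = height
    return last
```

Handling the signal only helps if the process exits before the supervisor gives up: Docker sends SIGKILL once the grace period of `docker stop --time` (or `stop_grace_period` in Compose) expires, and systemd does the same after `TimeoutStopSec`, so those timeouts need to cover a final flush with a large dbcache.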

-

king-11 commented at 12:36 pm on May 25, 2024: none

I have been encountering this myself. bitcoind runs inside a Docker container, and when it’s asked to stop and doesn’t respond to SIGTERM, Docker uses SIGKILL after a timeout, resulting in exit code 137, i.e. an unclean stop. Can we attach a listener for all the signals somewhere?

-

Oztayls commented at 9:52 pm on February 2, 2025: none

Any more on this? I’ve been stuck on 90% for hours. Wondering if a smaller dbcache is more preventative, albeit slower? My internet provider shut off at midnight over the weekend for maintenance, causing this issue.

-

mzumsande commented at 7:56 pm on February 3, 2025: contributor

Any more on this? I’ve been stuck on 90% for hours. Wondering if a smaller dbcache is more preventative, albeit slower? My internet provider shut off at midnight over the weekend for maintenance, causing this issue.

This shouldn’t be caused by internet issues - it happens in case of an unclean shutdown. A smaller dbcache doesn’t prevent the need to roll forward, but it should reduce the time rolling forward takes - as does #30611, after which we would flush more often even if running with a large dbcache.
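Flushing more often even with a large dbcache amounts to adding a time trigger next to the usual size trigger. A minimal Python sketch of such a policy follows; the one-hour interval and all names are assumptions for illustration, not a description of what #30611 implements. The shorter the gap between flushes, the less work an unclean shutdown can throw away or leave to be replayed.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FlushPolicy:
    max_cache_bytes: int             # size trigger (assumed to stand in for the -dbcache budget)
    max_interval_s: float = 3600.0   # time trigger; one hour is an assumed example value

    def should_flush(self, cache_bytes: int, now: float, last_flush: float) -> bool:
        """Flush when the cache is full OR when too long has passed since the last flush."""
        if cache_bytes >= self.max_cache_bytes:
            return True
        return now - last_flush >= self.max_interval_s


def simulate(policy: FlushPolicy, block_sizes: List[int],
             seconds_per_block: float) -> Tuple[int, int]:
    """Return (number of flushes, most blocks ever held unflushed in the cache)."""
    cache_bytes = 0
    last_flush = 0.0
    flushes = 0
    pending = 0
    worst = 0
    for i, size in enumerate(block_sizes, start=1):
        now = i * seconds_per_block
        cache_bytes += size
        pending += 1
        worst = max(worst, pending)
        if policy.should_flush(cache_bytes, now, last_flush):
            flushes += 1
            cache_bytes = 0
            pending = 0
            last_flush = now
    return flushes, worst
```

With a cache that never fills, the time trigger alone caps the unflushed backlog (360 blocks at 10 s per block with a one-hour interval); without it, every block since startup stays in memory.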

-

Oztayls commented at 8:30 pm on February 3, 2025: none

Thanks. You are correct: when I looked into it some more, I had set Windows to update automatically, and it rebooted during the night. I’ve now turned that feature off, and uninstalled nearly all the software on the machine.

The rolling forward process finally completed last night, but now Bitcoin Core (V27) is not connecting to peers and has not recommenced downloading. Network traffic is zero. I’m now at 54% of the initial download after 1 week. I rebooted it, but it’s not responding. Progress increase per hour says “calculating…”. Should I reinstall Bitcoin Core?

-

Oztayls commented at 10:53 pm on February 3, 2025: none

The rolling forward process finally completed last night, but now Bitcoin Core (V27) is not connecting to peers and has not recommenced downloading. Network traffic is zero. I’m now at 54% of the initial download after 1 week. I rebooted it, but it’s not responding. Progress increase per hour says “calculating…”. Should I reinstall Bitcoin Core?

I managed to resolve this. Updated to V28.1 and reset all settings to default. Downloads started again OK.

One change I noticed was that the port has now changed to 9050. Is this OK?

-

DrahtBot renamed this:

"Rolling forward" at startup can take a long time, and is not interruptible

"Rolling forward" at startup can take a long time, and is not interruptible (after unclean shutdown)

on Feb 4, 2025

-

muaawiyahtucker commented at 10:46 am on April 19, 2025: none

Running a Raspberry Pi 4 with 8 GB RAM, I set dbcache to 5500, thinking I was smart and would get a faster sync, but then it crashed at around block 833432. I got this error in the log:

ERROR: Commit: Failed to commit latest txindex state

I didn’t understand why it did that, so I tried to reduce the dbcache to 5000, but it seems that when I did that, it ‘uncleanly restarted’, because when it rebooted, I got this rolling business. It is slow, but for me, every time it got to a certain point near where it initially got to (but not the same block each time), it spontaneously restarted bitcoind and restarted the rolling. I’ve since reduced the dbcache to 3000 (which is the default on my system) and am monitoring it closely.

-

achow101 closed this on May 1, 2025

-

achow101 referenced this in commit 5b8046a6e8 on May 1, 2025

-

andrewtoth commented at 7:14 pm on May 1, 2025: contributor

Woohoo!

This is a metadata mirror of the GitHub repository bitcoin/bitcoin. This site is not affiliated with GitHub. Content is generated from a GitHub metadata backup.

generated: 2026-03-03 03:13 UTC

More mirrored repositories can be found on mirror.b10c.me