In validation.cpp, both ConnectTip and DisconnectTip invoke a GetMainSignals()... callback, which schedules a future event through the CScheduler interface.

These functions are called in 3 places:

- ActivateBestChainTipStep
- InvalidateBlock
- RewindBlockIndex

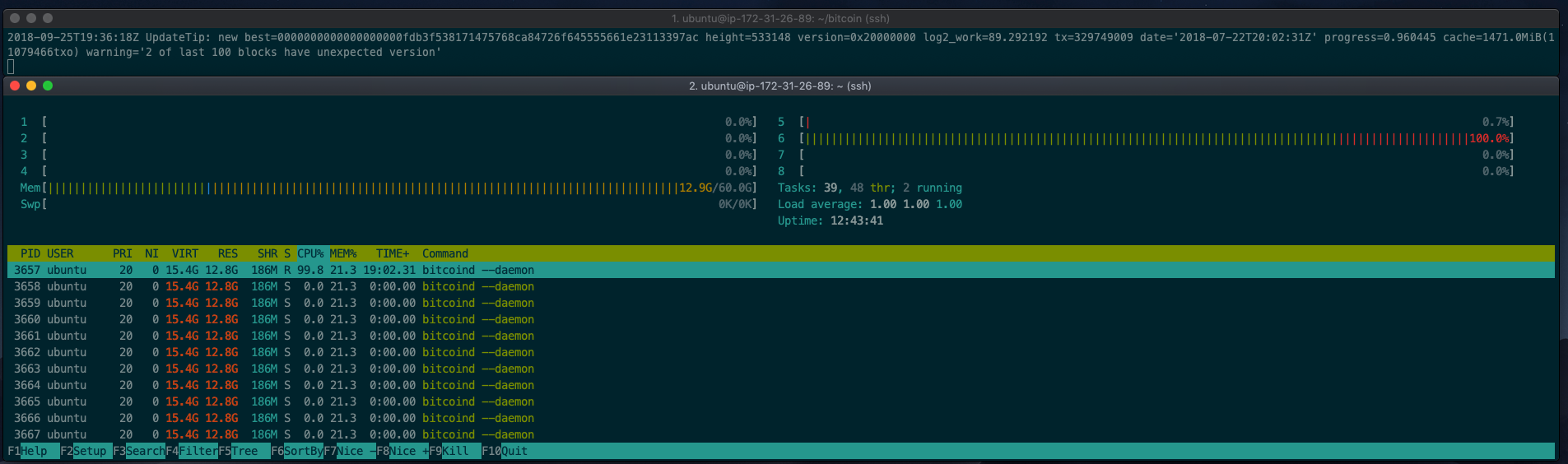

The first of these 3 prevents the scheduler queue from growing unboundedly, by limiting the size to 10 in ActivateBestChainTip. The other two, however, do not, and @Sjors discovered that a giant invalidateblock RPC call will in fact blow up the node’s memory usage (which turns out to be caused by the queued events holding all disconnected blocks in memory).
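The difference between the bounded and unbounded callers can be sketched as follows. This is a minimal single-threaded model, not the actual validation code: `CallbackQueue`, `DisconnectMany` and the `limit` parameter are hypothetical names introduced for illustration, and the real node waits on a background scheduler thread instead of running callbacks inline.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <utility>

// Hypothetical stand-in for the scheduler-backed callback queue.
class CallbackQueue {
public:
    void Add(std::function<void()> fn) { m_pending.push_back(std::move(fn)); }
    std::size_t Pending() const { return m_pending.size(); }

    // Run everything queued so far, oldest first.
    void Drain() {
        while (!m_pending.empty()) {
            std::function<void()> fn = std::move(m_pending.front());
            m_pending.pop_front();
            fn();
        }
    }

private:
    std::deque<std::function<void()>> m_pending;
};

// Queue one callback per "disconnected block". If `limit` is nonzero, the
// producer drains the queue as soon as more than `limit` callbacks are
// pending; if it is zero, nothing is drained until the loop finishes.
// Returns the peak queue depth that was observed.
std::size_t DisconnectMany(CallbackQueue& queue, std::size_t num_blocks,
                           std::size_t limit, std::size_t& callbacks_run) {
    std::size_t peak = 0;
    for (std::size_t i = 0; i < num_blocks; ++i) {
        queue.Add([&callbacks_run] { ++callbacks_run; });
        peak = std::max(peak, queue.Pending());
        if (limit != 0 && queue.Pending() > limit) queue.Drain();
    }
    queue.Drain();
    assert(queue.Pending() == 0);
    return peak;
}
```

With a limit of 10 the peak depth stays at 11 no matter how many blocks are processed; without a limit it grows linearly with the number of blocks, which mirrors the memory blow-up described above.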

I believe there are several improvements necessary.

- (short term symptom fix) If this issue also appears for RewindBlockIndex, we must fix it before 0.17, as this may prevent nodes from upgrading from pre-0.13 code. I think this is easy: this function is called prior to normal node operation, so it can be reworked to release cs_main intermittently and drain the queue.

- (long term fix) The use of background queues is a means to increase parallelism, not a way to break lock dependencies (as @gmaxwell pointed out privately to me, whenever a background queue is used to break a dependency, attempts to limit its size can turn into a deadlock). One way forward, I think, is to have a debug mode where the background scheduler immediately runs all scheduled actions synchronously, forcing all add-to-queue calls to happen without locks held. Once everything works in such a mode, we can safely add actual queue size limits as opposed to ad-hoc checks to prevent the queue from growing too large.

- (orthogonal memory usage reduction) It’s unfortunate that our DisconnectBlock events hold a shared pointer to the block being disconnected. Ideally there is a shared layer that only keeps the last few blocks in memory, and releases and reloads them from disk when needed otherwise. This wouldn’t fix the unbounded growth issue above, but it would reduce the impact. It’s also independently useful to reduce memory usage during very large reorgs (where we can’t run callbacks until the entire thing has completed).
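To make the long term idea more concrete, here is a rough sketch of what such a debug mode could look like. Everything in it is an assumption for illustration: `DebugScheduler`, `TrackedLock` and the `g_locks_held` counter are hypothetical and do not correspond to existing CScheduler or lock-tracking APIs. In synchronous mode, scheduling with a tracked lock held is treated as a bug and reported by throwing; otherwise the action runs immediately.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <mutex>
#include <stdexcept>
#include <utility>

// Number of tracked locks held by the current thread (hypothetical bookkeeping).
thread_local int g_locks_held = 0;

// RAII guard that locks a mutex and records that this thread holds it.
class TrackedLock {
public:
    explicit TrackedLock(std::mutex& m) : m_lock(m) { ++g_locks_held; }
    ~TrackedLock() {
        assert(g_locks_held > 0);
        --g_locks_held;
    }
    TrackedLock(const TrackedLock&) = delete;
    TrackedLock& operator=(const TrackedLock&) = delete;

private:
    std::unique_lock<std::mutex> m_lock;
};

// Hypothetical scheduler with a synchronous debug mode.
class DebugScheduler {
public:
    explicit DebugScheduler(bool synchronous) : m_synchronous(synchronous) {}

    void Schedule(std::function<void()> fn) {
        if (m_synchronous) {
            // Debug mode: refuse to enqueue while a tracked lock is held,
            // then run the action right away on the calling thread.
            if (g_locks_held != 0) {
                throw std::logic_error("Schedule() called with a lock held");
            }
            fn();
            return;
        }
        // Normal mode: defer the action until RunQueued() is called.
        m_queue.push_back(std::move(fn));
    }

    std::size_t Pending() const { return m_queue.size(); }

    // Run all deferred actions, oldest first.
    void RunQueued() {
        while (!m_queue.empty()) {
            std::function<void()> fn = std::move(m_queue.front());
            m_queue.pop_front();
            fn();
        }
    }

private:
    bool m_synchronous;
    std::deque<std::function<void()>> m_queue;
};
```

Once every call site passes in synchronous mode, a hard size limit on the normal-mode queue could block the producer without risking the deadlock mentioned above, since no producer would be holding a lock that the consumer needs.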